They are sorted by increasing compression ratio, using plain CSVs as a baseline. I have a dataset, let's call it product, on HDFS, which was imported as Parquet files using the Sqoop ImportTool with the Snappy codec. As a result of the import, I have 100 files with a total of 46.
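
To reproduce this kind of ranking, you can express each format's size as a percentage saved relative to the plain-CSV baseline and then sort on that figure. The following is only a sketch under stated assumptions. It generates a hypothetical integer-only time series (the column names, row count, and random walk are invented and are not the author's data), compresses it with the Python standard-library codecs zlib, bz2, and lzma, and sorts the results. Snappy is not in the standard library, so it is left out here.

```python
from __future__ import annotations

import bz2
import csv
import io
import lzma
import random
import zlib


def make_integer_csv(rows: int, seed: int = 0) -> bytes:
    """Generate a hypothetical integer-only time series as CSV bytes."""
    rng = random.Random(seed)
    buf = io.StringIO()
    writer = csv.writer(buf)
    writer.writerow(["timestamp", "symbol_id", "price", "volume"])
    price = 10_000
    for t in range(rows):
        price += rng.randint(-5, 5)  # small random walk in integer ticks
        writer.writerow([1_600_000_000 + t, rng.randrange(35), price, rng.randint(1, 500)])
    return buf.getvalue().encode("ascii")


def compare_codecs(raw: bytes) -> list[tuple[str, int, float]]:
    """Return (codec, size_in_bytes, percent_saved_vs_plain_csv), sorted by savings."""
    sizes = {
        "plain CSV": len(raw),
        "zlib": len(zlib.compress(raw, 9)),
        "bz2": len(bz2.compress(raw, 9)),
        "lzma": len(lzma.compress(raw)),
    }
    baseline = sizes["plain CSV"]
    rows = [(name, size, 100.0 * (1 - size / baseline)) for name, size in sizes.items()]
    return sorted(rows, key=lambda r: r[2])  # increasing compression, baseline first


if __name__ == "__main__":
    for name, size, saved in compare_codecs(make_integer_csv(20_000)):
        print(f"{name:10s} {size:>10,d} bytes  {saved:5.1f}% smaller")
```

The percentage saved is the same kind of figure as "78% compression" for compressed CSVs. Because the data here is synthetic, the exact numbers will differ from any real dataset.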

SNAPPY COMPRESSION FORMAT HOW TO

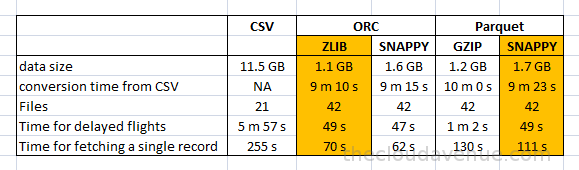

Using a sample of 35 random symbols with only integers, here are the aggregate data sizes under various storage formats and compression codecs on Windows. Compressed CSVs achieved 78% compression. Parquet v2 with internal GZip achieved an impressive 83% compression on my real data, an extra 10 GB in savings over compressed CSVs. Both took a similar amount of time for the compression, but Parquet files are more easily ingested by Hadoop HDFS.

My goal this weekend is to experiment with and implement a compact and efficient data transport format. My financial time-series data is currently collected and stored in hundreds of gigabytes of SQLite files on non-clustered, RAIDed Linux machines. I have an experimental cluster computer running Spark, but I also have access to AWS ML tools, as well as partners with their own ML tools and environments (TensorFlow, Keras, etc.).

Goal: Efficiently transport integer-based financial time-series data to dedicated machines and research partners by experimenting with the smallest data transport format(s) among Avro, Parquet, and compressed CSVs.

Community, please help me understand how to get a better compression ratio with Spark. Let me describe the case.

Snappy is an LZ77-type compressor with a fixed, byte-oriented encoding. There is no entropy encoder backend or framing layer; the latter is assumed to be handled by other parts of the system. The Snappy format description only describes the format, not how the Snappy compressor or decompressor actually works. Snappy is an extremely fast compressor (250 MB/s) and decompressor (500 MB/s). However, it may achieve a lower compression ratio than other programs, which means Snappy-compressed files are larger than those created by other compressors. Snappy.NET is a P/Invoke wrapper around native Snappy.

When writing to an ORC file, the service chooses ZLIB, which is the default for ORC.

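
Because the format is byte-oriented and has no entropy coder, a decoder is short. The following is a minimal sketch of a raw (unframed) Snappy block decoder, written against the published format description. The layout it assumes is a little-endian varint preamble holding the uncompressed length, followed by literal elements and by copy elements with 1-, 2-, or 4-byte offsets. The function names are hypothetical. This is a teaching aid rather than a hardened decoder, and it does only basic bounds checking.

```python
from __future__ import annotations


def read_varint(buf: bytes, pos: int) -> tuple[int, int]:
    """Decode a little-endian base-128 varint; return (value, next_position)."""
    result = shift = 0
    while True:
        byte = buf[pos]
        pos += 1
        result |= (byte & 0x7F) << shift
        if byte < 0x80:
            return result, pos
        shift += 7
        if shift > 28:
            raise ValueError("varint too long for a 32-bit length")


def snappy_decompress(buf: bytes) -> bytes:
    """Decode one raw (unframed) Snappy block."""
    expected_len, pos = read_varint(buf, 0)
    out = bytearray()
    while pos < len(buf):
        tag = buf[pos]
        pos += 1
        kind = tag & 0x03  # low two bits select the element type
        if kind == 0:  # literal
            length = tag >> 2
            if length >= 60:  # 60..63: (length - 1) stored in the next 1..4 bytes
                nbytes = length - 59
                length = int.from_bytes(buf[pos:pos + nbytes], "little")
                pos += nbytes
            length += 1
            if pos + length > len(buf):
                raise ValueError("literal runs past end of input")
            out += buf[pos:pos + length]
            pos += length
            continue
        if kind == 1:  # copy with 1-byte offset: 3-bit length, 11-bit offset
            length = 4 + ((tag >> 2) & 0x07)
            offset = ((tag >> 5) << 8) | buf[pos]
            pos += 1
        elif kind == 2:  # copy with 2-byte little-endian offset
            length = (tag >> 2) + 1
            offset = int.from_bytes(buf[pos:pos + 2], "little")
            pos += 2
        else:  # copy with 4-byte little-endian offset
            length = (tag >> 2) + 1
            offset = int.from_bytes(buf[pos:pos + 4], "little")
            pos += 4
        if offset == 0 or offset > len(out):
            raise ValueError("copy offset out of range")
        start = len(out) - offset
        for i in range(length):  # byte by byte, so overlapping copies repeat data
            out.append(out[start + i])
    if len(out) != expected_len:
        raise ValueError("decoded length does not match preamble")
    return bytes(out)
```

For example, `snappy_decompress(b"\x09\x08abc\x09\x03")` returns `b"abcabcabc"`. The input is a 3-byte literal followed by a 6-byte copy at offset 3. Copying byte by byte lets an overlapping copy repeat recent data, which is how LZ77-style formats encode runs.
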
By default, Big SQL uses SNAPPY compression when writing into Parquet tables. This means that if data is loaded into Big SQL using either the LOAD HADOOP or INSERT...SELECT commands, SNAPPY compression is enabled by default. When reading, the compression codec recorded in the file metadata is used to decode the data.

SNAPPY COMPRESSION FORMAT FULL

With the JSON format, the query result is unloaded to a JSON file in which each line contains a JSON object representing a full record in the query result. For more information about the Apache Parquet format, see Parquet. By default, each row group is compressed using SNAPPY compression.

ORC files have three compression-related options: NONE, ZLIB, and SNAPPY. The service supports reading data from ORC files in any of these compressed formats.

SNAPPY is a compression algorithm in the Lempel-Ziv 77 (LZ77) family. The Snappy bitstream format is stable and will not change between versions. Snappy is also proven in production: over the last few years, it has compressed and decompressed petabytes of data in Google's production environment. The checksum is based on the user-supplied input data, that is, the uncompressed bytes: `int crc32c = calculateCRC32C(input, offset, length);`
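
To make the LZ77 connection concrete, here is a deliberately simple greedy compressor that emits the raw Snappy element layout. It is an assumption-laden sketch, not Google's algorithm. It indexes 4-byte sequences in a dictionary, emits only copies with 2-byte offsets (so matches must lie within 65,535 bytes), and makes no attempt at Snappy's speed. The helper names and the `MIN_MATCH` and `MAX_OFFSET` choices are hypothetical.

```python
MIN_MATCH = 4        # shortest back-reference this sketch emits (an assumption)
MAX_OFFSET = 0xFFFF  # only 2-byte-offset copies are used, for simplicity


def _varint(n: int) -> bytes:
    """Little-endian base-128 varint, as used for the length preamble."""
    out = bytearray()
    while n >= 0x80:
        out.append((n & 0x7F) | 0x80)
        n >>= 7
    out.append(n)
    return bytes(out)


def _emit_literal(out: bytearray, lit: bytes) -> None:
    n = len(lit) - 1
    if n < 60:
        out.append(n << 2)  # length - 1 fits in the tag's upper six bits
    else:
        nbytes = (n.bit_length() + 7) // 8  # 1..4 extra length bytes
        out.append((59 + nbytes) << 2)
        out += n.to_bytes(nbytes, "little")
    out += lit


def _emit_copy(out: bytearray, offset: int, length: int) -> None:
    while length > 0:  # a 2-byte-offset copy holds at most 64 bytes
        chunk = min(length, 64)
        out.append(((chunk - 1) << 2) | 0x02)
        out += offset.to_bytes(2, "little")
        length -= chunk


def snappy_compress_greedy(data: bytes) -> bytes:
    """Greedy LZ77-style compression into the raw Snappy block layout."""
    out = bytearray(_varint(len(data)))
    last_seen: dict[bytes, int] = {}  # 4-byte sequence -> most recent position
    lit_start = i = 0
    while i + MIN_MATCH <= len(data):
        key = data[i:i + MIN_MATCH]
        cand = last_seen.get(key)
        last_seen[key] = i
        if cand is None or i - cand > MAX_OFFSET:
            i += 1
            continue
        length = MIN_MATCH  # extend the match as far as the bytes agree
        while i + length < len(data) and data[cand + length] == data[i + length]:
            length += 1
        if lit_start < i:
            _emit_literal(out, data[lit_start:i])
        _emit_copy(out, i - cand, length)
        i += length
        lit_start = i
    if lit_start < len(data):
        _emit_literal(out, data[lit_start:])
    return bytes(out)
```

For instance, `snappy_compress_greedy(b"abcabcabc")` yields `b"\x09\x08abc\x16\x03\x00"`. That output is the varint length 9, a 3-byte literal, and then one copy of 6 bytes at offset 3.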

With pandas, you can choose different Parquet backends and have the option of compression. `DataFrame.to_parquet(path=None, engine='auto', compression='snappy', index=None, partition_cols=None, storage_options=None, **kwargs)` writes a DataFrame to the binary Parquet format, that is, as a Parquet file.

The following example specifies that data in the table newtable be stored in Parquet format and use Snappy compression, starting with `CREATE TABLE newtable WITH (format = 'Parquet', ...`. The default compression for Parquet is GZIP. For information about the compression formats that each file format supports, see Athena compression support.

Snappy has the following properties. Fast: compression speeds of 250 MB/sec and beyond, with no assembler code. SNAPPY files are a compressed file format developed by Google; a SNAPPY file is created by the SNAPPY program.

A Java framed-stream writer documents `private void writeCompressed(byte[] input, int offset, int length)` as follows: `input` is the byte[] containing the raw data to be compressed, `offset` is the offset into `input` where the data starts, and the method calls a helper to actually write the frame. In Rust, snap provides Snappy compression, including the Snappy frame format.
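
The frame that `writeCompressed` ultimately writes follows the Snappy framing format, which is also what snap implements. The sketch below builds a framed stream in Python using only uncompressed-data chunks (type 0x01). The framing format allows these, so no compressor is needed. A fuller writer would compress each chunk and could fall back to an uncompressed chunk when compression does not pay off. Each chunk carries the masked CRC-32C of its uncompressed bytes, in line with the comment that the CRC is based on the user-supplied input data. The function names are hypothetical. The constants (the `sNaPpY` stream identifier, the 65,536-byte chunk limit, and the 0xa282ead8 mask) are taken from the framing format description.

```python
import struct

STREAM_IDENTIFIER = b"\xff\x06\x00\x00sNaPpY"  # chunk type 0xff, length 6, "sNaPpY"
MAX_CHUNK_DATA = 65536  # limit on uncompressed bytes per chunk


def crc32c(data: bytes) -> int:
    """Bitwise CRC-32C (Castagnoli), reflected polynomial 0x82F63B78."""
    crc = 0xFFFFFFFF
    for byte in data:
        crc ^= byte
        for _ in range(8):
            crc = (crc >> 1) ^ 0x82F63B78 if crc & 1 else crc >> 1
    return crc ^ 0xFFFFFFFF


def masked_crc32c(data: bytes) -> int:
    """Checksum as stored in a frame: rotate right by 15 bits, then add a constant."""
    crc = crc32c(data)
    rotated = ((crc >> 15) | (crc << 17)) & 0xFFFFFFFF
    return (rotated + 0xA282EAD8) & 0xFFFFFFFF


def write_framed_uncompressed(data: bytes) -> bytes:
    """Frame `data` as a Snappy stream made only of uncompressed (type 0x01) chunks."""
    out = bytearray(STREAM_IDENTIFIER)
    for start in range(0, len(data), MAX_CHUNK_DATA):
        chunk = data[start:start + MAX_CHUNK_DATA]
        body = struct.pack("<I", masked_crc32c(chunk)) + chunk  # CRC of uncompressed bytes
        out.append(0x01)                        # chunk type: uncompressed data
        out += len(body).to_bytes(3, "little")  # 24-bit little-endian chunk length
        out += body
    return bytes(out)
```

The bitwise CRC keeps the sketch short. A table-driven or hardware-accelerated CRC-32C would be the usual choice when speed matters.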
